Every forward-thinking tech company has to plan for future growth across all aspects of its software development processes. Whether you are building software infrastructure for an internal enterprise system or a customer-facing product, your company needs to choose algorithms, network protocols, and applications that can accommodate change.

But before finalizing your decision regarding any ongoing or future application building initiative, you need to determine the scalability model that will withstand database expansion or increased user activity.

In this article, we define scalability from a software standpoint. We also explore how software scalability affects application modernization as well as how your business can benefit from it.

In software development, scalability is a system’s capacity to adapt to both expected and unexpected increases in workload. For example, the scalability of a database determines how well it adapts to new users joining the system or how it handles integrations.

Scalability in software development covers performance, maintenance, availability, and expenditure. In essence, every company can define its parameters for scalability based on specific targets. For this article, we’ll focus on performance, since it is an all-encompassing parameter that affects productivity, and subsequently, conversion.

Software scalability analysis (scalability testing) is the process of testing the performance of a system or software application. This procedure determines how the system or application responds to structural changes that increase the workload.

Similar to performance, software scalability is quantifiable. You just need to establish the relevant performance criteria or system attributes to track.

Here are the scalability testing attributes to consider:

Response time refers to the time that elapses between a user’s request and the application’s response — the lower the response time, the better the application’s performance. On average, users can tolerate a 5-second response time. Anything above that harms your app’s performance and hampers the user experience.

Resource usage measures how much memory and network bandwidth the application consumes when it is in full operation.

Throughput is a measurement unit that tracks the number of requests processed within a specific time interval. For instance, web applications measure throughput by the number of queries received or processed in the specified time frame.

This attribute determines the price of every transaction carried out by the application.
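As a rough illustration of how these attributes can be quantified, the sketch below derives response time, throughput, and cost per transaction from a list of request records. The `RequestRecord` format, the sample numbers, and the `infrastructure_cost` figure are assumptions made up for this example; resource usage is left out because it needs system-level sampling rather than request logs.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    """One logged request (hypothetical log format, for illustration only)."""
    start_s: float     # when the request arrived, in seconds
    duration_s: float  # time until the response was sent, in seconds


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records, infrastructure_cost):
    """Return response time, throughput, and cost per transaction for one test run."""
    durations = [r.duration_s for r in records]
    first_start = min(r.start_s for r in records)
    last_end = max(r.start_s + r.duration_s for r in records)
    window_s = last_end - first_start
    return {
        "avg_response_s": sum(durations) / len(durations),
        "p95_response_s": percentile(durations, 95),
        "throughput_rps": len(records) / window_s,
        "cost_per_transaction": infrastructure_cost / len(records),
    }


# Ten made-up requests arriving every half second; one of them is very slow.
sample_durations = [0.2, 0.3, 0.25, 0.4, 6.0, 0.3, 0.2, 0.35, 0.3, 0.5]
records = [RequestRecord(start_s=i * 0.5, duration_s=d)
           for i, d in enumerate(sample_durations)]
report = summarize(records, infrastructure_cost=2.0)
print(report)
```

In this made-up run the average response time stays under a second, yet the 95th percentile lands on the 6-second outlier. That is why it can be worth tracking a high percentile alongside the average when you repeat the test at higher load.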

Note: Tools like Microsoft’s Azure Application Insights and Amazon’s CloudWatch can help you test the scalability of your system based on the attributes mentioned above.

Now that you know what software scalability analysis entails, let’s focus on how it can help startups and SMBs improve their software development processes.

In 2008, British Airways canceled over 34 flights due to a software glitch. According to reports from Heathrow’s Terminal 5, a new baggage handling system had failed, leaving passengers stranded at baggage claim. Apparently, the newly released software couldn’t handle the real-life workload, despite extensive preliminary testing and simulations.

Similar disruptions hit the same airline at the same airport in 2019 and again in 2020, all caused by software scalability issues. Talk about not learning from mistakes.

But incidents like this are not limited to air travel; similar mishaps occur in every industry.

Fashion magazine Elle reported that Goat’s fashion website crashed after Meghan Markle appeared in one of their dresses. Goat’s servers were unable to handle the instantaneous influx of new shoppers.

Although these companies addressed these issues retroactively, the cost to their respective businesses was massive.

However, your business can implement scalable software development practices to protect you from these unprecedented interruptions by spotting potential “red flags” beforehand.

Here are signs that it’s time to scale:

Due to compatibility issues, businesses that rely on legacy systems often struggle to integrate new technologies and automation. As a result, upgrading the software becomes a tedious — if not impossible — task to accomplish.

If you are struggling to integrate automation into the software program, system modernization services are often the best option.

If your system is pumping out error messages — and frequent repairs are now part of the daily routine — scaling should top your company’s to-do list.

What are the reasons for these frequent error reports?

For starters, security issues and internal vulnerabilities like buggy code and conflicting codebases might be disrupting the system. Not only that, any technical failure in monolithic systems affects the entire application — if one module fails, the whole system must be fixed to get things running at a regular pace.

Although large corporations can absorb heavy spending to address scalability problems, startups and SMBs need to explore ways to limit expenditure while maintaining optimum product performance.

If you are spending a fortune just to fix a recurring issue — let’s say you are always paying for server updates — then it is time to find a scalability model for your system.

And that brings us back to application modernization. Most of the time, modernizing your legacy systems can help you avoid these red flags and save maintenance costs. And with detail-oriented code review practices — covering UI, architecture, and testing — you can spot avenues to modernize and scale your system.

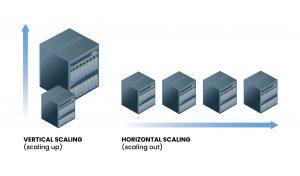

Software scalability can take different forms depending on the affected part of the system. Here are the two most common software scalability models.

Horizontal scaling (scaling out) involves adding nodes to (or removing nodes from) a system. A node, in this scenario, could be a new computer or server. Essentially, adding more servers to an existing application means that you are scaling it out.

Massive social networks rely on horizontal scaling to spread workloads across many machines and share processing power. Horizontal scaling also lets you keep your existing computing resources online while adding more nodes.

However, adding multiple nodes increases the initial costs, since the system becomes more complex to maintain and operate.
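To make the idea of scaling out concrete, here is a minimal in-memory sketch of a pool that spreads requests across nodes in turn and accepts a new node while the others keep serving. The `RoundRobinPool` class and the server names are hypothetical; a production setup would use a real load balancer with health checks rather than this toy rotation.

```python
from itertools import count


class RoundRobinPool:
    """Distributes requests across nodes in turn (illustrative, in-memory only)."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self._counter = count()  # running request number: 0, 1, 2, ...

    def add_node(self, node):
        """Scale out: existing nodes stay in rotation while a new one joins."""
        self.nodes.append(node)

    def remove_node(self, node):
        """Scale in: take a node out of rotation."""
        self.nodes.remove(node)

    def route(self):
        """Pick the node that should handle the next incoming request."""
        return self.nodes[next(self._counter) % len(self.nodes)]


pool = RoundRobinPool(["server-a", "server-b"])
before = [pool.route() for _ in range(4)]  # alternates between the two nodes

pool.add_node("server-c")                  # scale out without stopping the pool
after = [pool.route() for _ in range(3)]   # all three nodes now receive traffic
print(before, after)
```

Even in this toy version the extra moving parts are visible: something has to track the node list and decide where each request goes, which is part of the added operational complexity mentioned above.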

…

…

Vertical scaling (scaling up) involves adding resources to a single node instead of the entire system. When you scale up your application, you increase its capacity and throughput.

Not only that, vertical scaling gives you massive computing power and allows data to live on a single node, albeit within the limits of your server.

One major drawback to the vertical scalability model is that it follows Amdahl’s law, which states that “the overall performance improvement gained by optimizing a single part of a system is limited by the fraction of time that the improved part is actually used.”

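In essence, each upgrade to a single node yields diminishing returns: only the part of the workload that actually uses the added resources gets faster, so the overall gain levels off no matter how much computing power you add.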
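Amdahl’s law is usually written as speedup = 1 / ((1 − p) + p / s), where p is the fraction of run time that benefits from the upgrade and s is how much faster that fraction becomes. The short sketch below evaluates the formula; the 60% figure is an assumed example value, not a measurement.

```python
def amdahl_speedup(improved_fraction, improvement_factor):
    """Overall speedup when `improved_fraction` of run time becomes
    `improvement_factor` times faster (Amdahl's law)."""
    if not 0.0 <= improved_fraction <= 1.0:
        raise ValueError("improved_fraction must be between 0 and 1")
    if improvement_factor <= 0:
        raise ValueError("improvement_factor must be positive")
    unchanged = 1.0 - improved_fraction
    return 1.0 / (unchanged + improved_fraction / improvement_factor)


# Assume 60% of the workload benefits from a bigger server.
for factor in (2, 4, 1000):
    print(factor, round(amdahl_speedup(0.6, factor), 3))

# The ceiling as the improvement factor grows without bound: 1 / (1 - p).
upper_bound = 1.0 / (1.0 - 0.6)
```

Under this assumption, doubling the speed of the improved part gives roughly a 1.43x overall gain, and even a thousandfold improvement stays below the 2.5x ceiling set by the 40% of the workload that does not benefit.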

Another major drawback is that vertical scaling exposes your system to a single point of failure, which places your data at risk when failures occur.

Despite the drawbacks of vertical scalability stated above, startups and SMBs can still benefit from both approaches.

Advantages of horizontal scaling:

Advantages of vertical scaling:

You can also combine the best of both scalability models through autoscaling — a scalability model that monitors changes in your application and automatically adjusts capacity to maintain performance at optimal levels.
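The core of an autoscaling decision can be sketched as a simple proportional rule: size the pool so that the average load per node returns to a target level, within fixed bounds. The function name, the CPU-percentage metric, and the default limits below are assumptions for illustration; this is not any particular vendor’s algorithm.

```python
import math


def desired_nodes(current_nodes, observed_cpu_pct, target_cpu_pct,
                  min_nodes=1, max_nodes=10):
    """Return how many nodes are needed to bring average CPU back to the target.

    Proportional rule: desired = ceil(current * observed / target),
    clamped to the range [min_nodes, max_nodes].
    """
    if current_nodes < 1 or target_cpu_pct <= 0:
        raise ValueError("need at least one node and a positive target")
    wanted = math.ceil(current_nodes * observed_cpu_pct / target_cpu_pct)
    return max(min_nodes, min(max_nodes, wanted))


# Four nodes averaging 90% CPU against a 60% target: add capacity.
scale_out = desired_nodes(4, observed_cpu_pct=90, target_cpu_pct=60)

# The same four nodes at 30% CPU: release capacity.
scale_in = desired_nodes(4, observed_cpu_pct=30, target_cpu_pct=60)

# A spike that would need 13 nodes is capped at max_nodes.
capped = desired_nodes(8, observed_cpu_pct=95, target_cpu_pct=60)
print(scale_out, scale_in, capped)
```

The minimum and maximum bounds matter as much as the rule itself: the floor keeps the application available during quiet periods, and the ceiling keeps a traffic spike from turning into an unbounded bill.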

In cloud computing, world-renowned vendors like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform offer load-balancing and autoscaling features, such as AWS’s Elastic Load Balancing (ELB), to help you autoscale your software.

There is no universal formula to scaling applications, but we’ve gathered some of the best practices to implement in ongoing and future projects. You can even use them retroactively when modernizing legacy applications.

Before analyzing your application’s scalability potential, outline the fundamental values to measure. Attributes like throughput, resource usage, and response time should top the list of scalability metrics for every software.

Data from the Hosting Tribunal shows that the public cloud service market will reach 620 billion USD by 2023, with 66% of enterprises already running a central cloud team. Besides, cloud-based storage offers more flexibility than local storage. With that in mind, moving from locally-hosted servers to those that are cloud-based will make your system easier to scale.

A cache stores pre-computed results that the application can access without having to process those requests again. As a result, caches allow your application to retrieve data without querying the database each time, which reduces the overall response time.
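A minimal sketch of this idea uses Python’s built-in `functools.lru_cache`. The `slow_lookup` function is a hypothetical stand-in for a database query, with an artificial delay and a call counter so the effect is visible; a shared deployment would more likely use an external cache service.

```python
import time
from functools import lru_cache

backend_calls = {"count": 0}  # counts how often the slow path actually runs


def slow_lookup(product_id):
    """Hypothetical stand-in for a database query (the delay is simulated)."""
    backend_calls["count"] += 1
    time.sleep(0.05)
    return f"product-{product_id}"


@lru_cache(maxsize=128)
def get_product_name(product_id):
    """Return the cached result when available; otherwise hit the slow path once."""
    return slow_lookup(product_id)


first = get_product_name(7)   # cache miss: runs slow_lookup
second = get_product_name(7)  # cache hit: served from memory
stats = get_product_name.cache_info()
print(first, second, backend_calls["count"], stats.hits, stats.misses)
```

The trade-off is freshness: a cached value can go stale when the underlying data changes, so real systems pair caching with an expiry or invalidation policy.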

Some companies use monolithic application architectures for services like business logic, UI, and data access layers. However, this architecture limits the application’s scalability to only one direction — up. But with a microservices architecture — used by 63% of startups and SMBs — you can host these services independently. This approach to scaling software applications ensures flexibility and reduces maintenance costs.

Software scalability determines how your application can adjust to an increase in workload while maintaining the same level of performance. You can analyze your application’s scalability by defining the attributes to measure. You can also scale your software program horizontally or vertically. Alternatively, you can adopt an autoscaling model to handle scaling operations automatically.

At IntexSoft, we can help you identify the best software scaling model for your application. We also perform full-scale legacy system modernization services, including complete or partial cloud migration.